RESEARCH

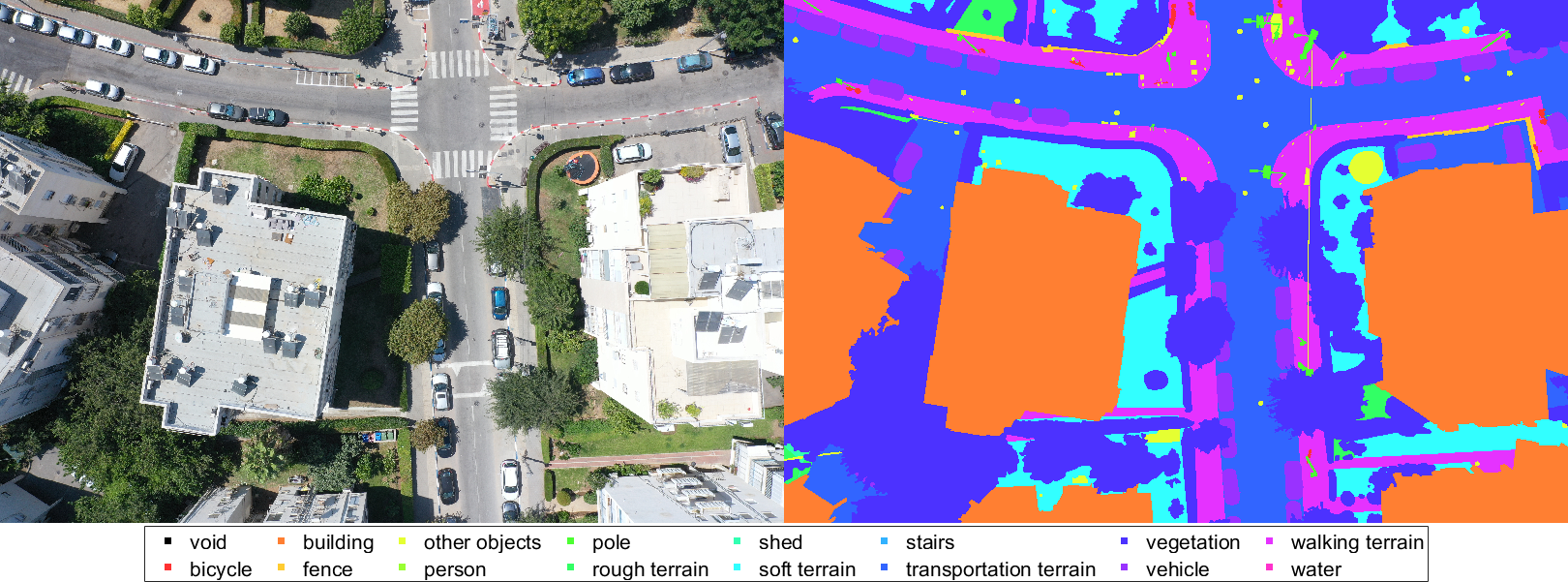

The research investigates a probabilistic, multi-resolution approach to vision-based aerial search in dense urban environments. Several new ideas are explored: a coverage-planning algorithm subject to energy or time constraints, the benefits of an iterative search process conducted at different altitudes, and the use of semantic segmentation as a detection “sensor” for the search problem.
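To make the detection “sensor” idea concrete, here is a minimal sketch of one Bayesian belief update in a grid-based probabilistic search. It assumes, hypothetically (these are not models or values from this research), that the search area is split into cells, that the segmentation network is reduced to a binary detector over the current camera footprint, and that this detector has a fixed detection probability `p_d` and false-alarm probability `p_fa`. The function name `update_belief` is illustrative.

```python
from typing import List, Sequence


def update_belief(belief: Sequence[float], observed: Sequence[int],
                  detected: bool, p_d: float, p_fa: float) -> List[float]:
    """Bayesian update of the target-location belief over grid cells.

    belief   -- prior probability that the target is in each cell (sums to 1)
    observed -- indices of the cells inside the current camera footprint
    detected -- whether the (hypothetical) segmentation-based detector fired
    p_d      -- assumed P(detection | target inside the footprint)
    p_fa     -- assumed P(detection | target outside the footprint)
    """
    in_view = set(observed)
    posterior = []
    for i, prior in enumerate(belief):
        if i in in_view:
            likelihood = p_d if detected else 1.0 - p_d
        else:
            likelihood = p_fa if detected else 1.0 - p_fa
        posterior.append(prior * likelihood)  # unnormalized posterior
    total = sum(posterior)
    return [p / total for p in posterior]  # renormalize to a distribution
```

A missed detection lowers the probability of the cells in view and raises it everywhere else, which is what lets a coverage planner steer toward promising, unexplored cells. In a multi-altitude scheme `p_d` would plausibly depend on altitude; that dependence is not modeled in this sketch.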

To validate the overall approach and test the new algorithms, a new, comprehensive dataset was built and made publicly available to the research community through a dedicated download page.

We also conduct computer vision research on semi-supervised learning and learning with noisy labels. This work addresses the challenges posed by incomplete annotations and unreliable labels, with the goal of making computer vision systems more robust. Because real-world data is rarely cleanly and fully labeled, we aim to build models that adapt and learn efficiently even when labeled information is limited or imperfect, so that they remain practical in diverse and challenging scenarios.
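As a generic illustration of learning from partially labeled data, the sketch below implements confidence-thresholded pseudo-labeling, a standard semi-supervised technique (used, for example, in FixMatch). It is not presented as this group's method; the function names and the 0.95 threshold are illustrative assumptions.

```python
import math
from typing import List, Optional


def softmax(logits: List[float]) -> List[float]:
    """Convert raw class scores into probabilities (max subtracted for stability)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pseudo_labels(logits_batch: List[List[float]],
                  threshold: float = 0.95) -> List[Optional[int]]:
    """Predicted class for each unlabeled sample, or None if not confident enough."""
    labels: List[Optional[int]] = []
    for logits in logits_batch:
        probs = softmax(logits)
        best = max(range(len(probs)), key=lambda i: probs[i])
        # Only confident predictions become training targets.
        labels.append(best if probs[best] >= threshold else None)
    return labels
```

For example, `pseudo_labels([[5.0, 0.0, 0.0], [1.0, 0.9, 0.8]])` returns `[0, None]`: the first sample is confidently class 0, while the second is nearly uniform and is left unlabeled. Raising the threshold admits fewer wrong (noisy) pseudo-labels at the cost of using less unlabeled data.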

Terrain-aided navigation (TAN) was developed before the GPS era to bound the error growth of inertial navigation. TAN algorithms were originally designed to exploit altitude-over-ground (clearance) measurements from a radar altimeter in combination with a Digital Terrain Map (DTM). After almost two decades of little activity, the availability of inexpensive cameras and computing power, together with the need for efficient GPS-denied positioning, has renewed interest in this approach. However, vision-based TAN is in many respects more challenging than the original radar-based version: visual observables provide range only up to an unknown scale factor, which prevents a straightforward extension of classical TAN techniques.
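The classical, radar-altimeter form of TAN can be illustrated with a simplified, TERCOM-style batch matcher. This sketch assumes a 1-D terrain profile along a known track, a barometric altitude, and radar clearances. It reconstructs the terrain elevation under the aircraft as altitude minus clearance, then searches the DTM for the offset with the smallest mean absolute difference. The name `match_profile` and the data layout are hypothetical.

```python
from typing import List, Sequence, Tuple


def match_profile(dtm: Sequence[float], baro_alt: Sequence[float],
                  clearance: Sequence[float]) -> Tuple[int, float]:
    """Return (best DTM offset, mean absolute difference) for a measured profile.

    Terrain elevation under the aircraft is reconstructed as
    barometric altitude minus radar-altimeter clearance.
    """
    measured: List[float] = [a - c for a, c in zip(baro_alt, clearance)]
    n = len(measured)
    if n == 0 or n > len(dtm):
        raise ValueError("profile must be non-empty and no longer than the DTM")
    best_offset, best_cost = -1, float("inf")
    # Exhaustive search over every position of the profile along the DTM.
    for offset in range(len(dtm) - n + 1):
        cost = sum(abs(dtm[offset + k] - measured[k]) for k in range(n)) / n
        if cost < best_cost:
            best_offset, best_cost = offset, cost
    return best_offset, best_cost
```

Here `measured` is in absolute metres. With visual observables known only up to scale, this direct comparison against DTM elevations no longer works, which is the difficulty described above.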

The main contribution of this work is a new, more flexible, and more efficient algorithm for visual-assisted TAN. The algorithm combines two fast stages, with a new outlier-rejection step between them that makes it robust and suitable for real-world data.
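The page does not specify what the two stages or the outlier-rejection step are. Purely as a hypothetical illustration of the general pattern (coarse search, robust outlier rejection, refinement), the sketch below assumes a 1-D track and vision-derived relative heights `rel` that relate to DTM heights through an unknown scale `a` and bias `b`. Stage 1 searches every offset with a per-candidate least-squares fit, a median-absolute-deviation (MAD) test removes outliers, and stage 2 refits on the inliers. All names (`two_stage_tan`, `fit_at`, `fit_scale_bias`) and the threshold `k = 3.0` are assumptions; this is not the authors' algorithm.

```python
import statistics
from typing import List, Sequence, Tuple


def fit_scale_bias(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Least-squares fit of y = a * x + b (a: unknown scale, b: unknown bias)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    a = sxy / sxx if sxx > 0 else 0.0
    return a, my - a * mx


def fit_at(dtm: Sequence[float], rel: Sequence[float], offset: int,
           idx: Sequence[int]) -> Tuple[float, float, float, List[float]]:
    """Fit scale/bias at one DTM offset using samples idx; return (mse, a, b, residuals)."""
    x = [rel[k] for k in idx]
    y = [dtm[offset + k] for k in idx]
    a, b = fit_scale_bias(x, y)
    res = [yi - (a * xi + b) for xi, yi in zip(x, y)]
    return sum(r * r for r in res) / len(res), a, b, res


def two_stage_tan(dtm: Sequence[float], rel: Sequence[float],
                  k: float = 3.0) -> Tuple[int, float, float, List[int]]:
    """Hypothetical matcher: coarse search, MAD outlier rejection, inlier refit."""
    n = len(rel)
    last = len(dtm) - n  # largest valid offset
    if n < 2 or last < 0:
        raise ValueError("need at least 2 samples and a DTM at least as long")
    all_idx = list(range(n))
    # Stage 1: try every offset, fitting the unknown scale and bias for each one.
    best = min(range(last + 1), key=lambda o: fit_at(dtm, rel, o, all_idx)[0])
    # Outlier rejection: keep samples whose residual lies within k robust sigmas
    # of the median residual (1.4826 * MAD estimates sigma for Gaussian noise).
    res = fit_at(dtm, rel, best, all_idx)[3]
    med = statistics.median(res)
    mad = statistics.median([abs(r - med) for r in res])
    thresh = max(k * 1.4826 * mad, 1e-9)
    inliers = [i for i, r in zip(all_idx, res) if abs(r - med) <= thresh]
    # Stage 2: refit on inliers only, re-checking the neighboring offsets.
    candidates = range(max(best - 1, 0), min(best + 1, last) + 1)
    offset = min(candidates, key=lambda o: fit_at(dtm, rel, o, inliers)[0])
    _, a, b, _ = fit_at(dtm, rel, offset, inliers)
    return offset, a, b, inliers
```

Fitting the scale separately for each candidate offset is one way to cope with up-to-scale measurements, and the MAD test is a common robust choice because the median is barely affected by a few gross outliers.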

MESSI (Multi-Elevation Semantic Segmentation Image dataset) comprises 2525 images taken by a drone flying over dense urban environments. The images were captured at various altitudes to investigate the effect of depth on semantic segmentation, and over several urban regions. This variety matters because it reflects the visual richness a drone captures during 3D flight with both horizontal and vertical maneuvers. Each image is also provided with its location, orientation, and the camera's intrinsic parameters. This makes MESSI a valuable resource for training deep neural networks for semantic segmentation, and the pose and calibration data also make it useful for other research directions beyond segmentation.
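Because every MESSI image comes with camera intrinsics and pose, simple geometry relates pixels to the ground. The sketch below is a hypothetical helper, not part of the dataset's tooling: it computes the ground sampling distance of a nadir pinhole camera and back-projects a pixel onto a flat ground plane. It assumes a z-up world frame with the ground at z = 0, a camera-to-world rotation `R`, and a camera center `C`; MESSI's actual pose conventions and file format are not described here, so check them before use.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def ground_sampling_distance(altitude_m: float, focal_px: float) -> float:
    """Ground distance (m) spanned by one pixel for a nadir pinhole camera over flat ground."""
    return altitude_m / focal_px


def pixel_to_ground(u: float, v: float, fx: float, fy: float, cx: float, cy: float,
                    R: Sequence[Sequence[float]], C: Sequence[float]) -> Vec3:
    """Intersect the viewing ray of pixel (u, v) with the ground plane z = 0.

    Assumed conventions (hypothetical): R rotates camera-frame vectors into a
    z-up world frame, C is the camera center in that frame, the camera looks
    along its +z axis, and the ground is flat at z = 0.
    """
    ray_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)  # pinhole back-projection
    d = [sum(R[i][j] * ray_cam[j] for j in range(3)) for i in range(3)]
    if d[2] >= 0:
        raise ValueError("viewing ray does not point toward the ground")
    t = -C[2] / d[2]  # ray parameter at which the ray reaches z = 0
    return (C[0] + t * d[0], C[1] + t * d[1], C[2] + t * d[2])
```

For a camera with a 1000-pixel focal length, one pixel covers 0.1 m of ground at 100 m altitude but only 0.03 m at 30 m, which illustrates how altitude changes the apparent size of the objects a segmentation network has to recognize.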